Top 10 IT Software companies in Vizag

Posted on January 02, 2026

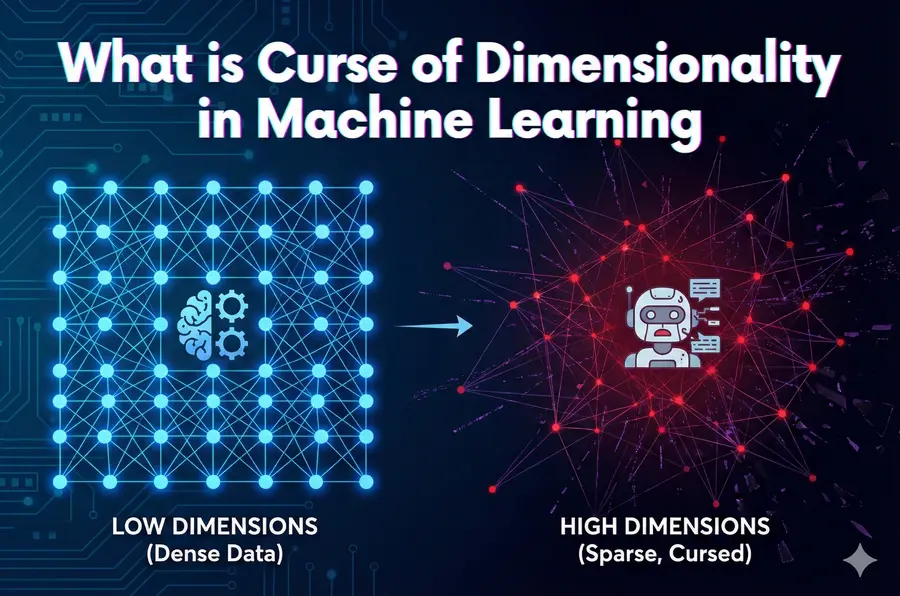

Today, high-dimensional data is everywhere. Modern systems are based on thousands of data points simultaneously, whether it is AI-driven applications and smart devices, medical scans, or financial models. This rapid growth of features is where the curse of dimensionality in machine learning quietly starts affecting performance.

Scattered data, weaker relationships, and models that have difficulty learning what matters are all effects of increased dimensions. In the process of becoming more accurate, algorithms tend to get slower and more expensive, and more likely to overfit.

The volume of data in the world is growing exponentially – the world datasphere is estimated to grow to some 181 zettabytes by the end of 2025, which is fuelled by IoT, video and cloud-native systems. This sheer size implies that high-dimensional data is a critical issue in its management.

To find out why it occurs, it is first important to take a clear look at the concept of dimensionality and its influence on the learning space of machine learning models.

The curse of dimensionality is a problem that happens when we work with too many features or too many inputs in machine learning.

In simple words, curse of dimensionality means that as we add more and more features, machine learning models find it harder to learn, need a lot more data, and often perform worse instead of better.

When you have only one or two features, the data is easy to look at, compare, and understand. But when the number of features keeps increasing (10, 50, 100, or more), things start becoming confusing for the model.

The data spreads out, patterns become harder to find, and the computer needs a lot more data and time to learn anything useful.

The curse of dimensionality occurs when the number of features in a machine learning problem keeps increasing. As more features are added, the space in which the data exists becomes larger.

The data then spreads out and becomes thinly distributed, which makes it much harder for the model to find clear patterns. Because of this larger space, the same amount of data that once felt enough suddenly becomes too small.

The model now requires a much bigger dataset to properly understand the relationships in the data.

Another reason it occurs is that the distance between data points increases as dimensions grow. Many machine learning algorithms depend on measuring how close or far data points are from one another.

In high dimensions, almost all points become far apart, so the idea of similar or nearby loses its meaning. This also leads to very sparse data space.

Even though the feature space becomes huge, real data occupies only a tiny portion of it, leaving most areas empty

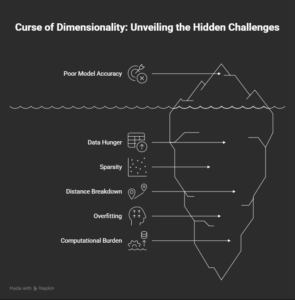

The high-dimensional data presents several difficulties that directly affect the performance and viability of models.

Data sparsity is experienced when the available samples do not provide a sufficient sample to fill the expanded feature space. Consequently, models do not have sufficient reference points in their immediate surroundings to allow them to make accurate forecasts.

As the dimensionality increases, distance measurements become useless since all points start to appear the same distance away. This has a very detrimental effect on algorithms like K-Nearest Neighbors and clustering models.

The cost of computation is also out of this world. Increased features consume more memory, take more time to execute, and cost more in terms of infrastructure.

Large dimensional data sets also promote overfitting, in which a model learns noise rather than the actual patterns, into which it will generalize poorly on unseen data.

Lastly, memory usage becomes a significant bottleneck, particularly on deep learning pipelines and in real-time.

When the number of features increases, the amount of data required also increases sharply. With only a few features, even a small dataset is enough for a model to learn patterns. But as dimensions grow, the model has to explore a much larger space.

If enough data is not available, the model guesses more than it learns, which leads to poor accuracy.

In high dimensions, data points do not stay close together. They spread out and leave most of the space empty. This empty space makes learning difficult because the model does not find enough nearby points to understand what is happening around them.

When data becomes sparse like this, patterns become weak and unclear. Which directly affect performance.

Many machine learning models depend on the concept of nearness for example, finding the closest neighbours or grouping similar points.

In higher dimensions, almost every point becomes far from every other point. The difference between “near” and “far” almost disappears. As a result, distance no longer gives useful information.

Methods that rely on distance, such as clustering or k-nearest neighbours, stop working effectively.

When there are too many features, the model becomes more complex than needed. It gets enough flexibility to fit even small random fluctuations in the data.

Instead of learning real patterns, it starts memorizing noise. The model may show very high accuracy on training data but performs badly on new data. This gap between training and real-world performance is a clear sign of overfitting.

More features mean more calculations, more storage, and more processing time. The model needs to handle large matrices, complex operations, and big memory usage.

Training becomes slow, testing becomes slow, and sometimes it may even exceed hardware limits. This makes model development costly and difficult in practice.

Not all features are useful. Some are repetitive, some add noise, and some do not help the model at all. By carefully removing these unwanted features, you reduce the number of dimensions. This makes the data denser and easier for the model to learn from. Feature selection can be done using basic checks like correlation, importance scores, or simple domain understanding.

Instead of using all features directly, we can compress them into fewer meaningful features. Techniques like PCA are commonly used for this. They keep the important information but reduce the total number of dimensions. With fewer dimensions, patterns become clearer, distance measures start making sense again, and learning becomes easier.

High dimensions demand more data. If possible, increasing the dataset size helps fill the empty space created by many features. With more samples, models get enough examples to learn real patterns instead of guessing. This reduces sparsity and improves model stability.

Some models struggle badly in high dimensions, while others can work more effectively.

Algorithms like tree-based models and regularized models usually cope better with many features compared to simple distance-based methods. Choosing the right model helps reduce the negative effects of high dimensionality.

5. Add regularization

Regularization controls model complexity. It prevents the model from giving too much importance to every feature and stops it from memorizing noise. This directly reduces overfitting, which is one of the key problems caused by high dimensions.

6. Create meaningful features instead of many raw ones

Rather than feeding too many raw inputs, try to create cleaner, more informative features. This is called feature engineering. When features are meaningful, you often need fewer of them, and the model learns better patterns with less effort.

7. Remove highly similar or correlated features

Sometimes multiple features say almost the same thing. These duplicate or highly correlated features increase dimensionality without adding value. Removing them reduces complexity and improves model performance.

PCA is one of the most popular dimensionality reduction methods. It takes a large number of features and converts them into a smaller set of new features while keeping most of the important information.

Instead of looking at each feature separately, PCA tries to understand where the biggest variation in the data is happening and focuses on that. In simple words, it compresses the data but keeps the meaning.

This helps models learn faster, reduces noise, and lowers the risk of overfitting, especially when many features are similar or highly related.

This method is mainly used when you want to visualize high-dimensional data on a simple 2D or 3D graph. High-dimensional data is difficult to imagine, so t-SNE helps by arranging similar data points close together and dissimilar ones far apart.

This makes patterns and groups easy to see with the human eye. It is very useful when you want to understand your data, find clusters, or explain results visually. It is more of a visualization tool than a modeling tool, but it is very powerful for understanding complex datasets.

Autoencoders are neural networks built to learn how to compress data and then reconstruct it again. They work in two steps: first, they reduce the data into a smaller, compact form, and then they try to rebuild the original data from this reduced version.

While doing this, they automatically learn which parts of the data are important and which parts can be ignored. Autoencoders are useful for images, audio, text, and other complex data where simple methods may not work well. They are especially helpful when the data has many features and non-linear relationships.

High-dimensional data potentially causes the smartest systems of today but without the appropriate controls, it becomes easy to compromise the performance of the model. Distorted distance measurements, increasing computation costs, overfitting and unstable predictions are real and inevitable threats.

The trick is in the careful feature engineering, intelligent dimensionality reduction and appropriate algorithms to the appropriate data. Even complex datasets can provide scalable results in a good manner when dealt with appropriately. In the case of the curse of dimensionality in machine learning, our professionals can assist you with the audit, optimization, and fine-tuning of your machine learning pipeline to provide high speed, accuracy, and reliability.

The above methods have various applications depending on the size of data, interpretability requirements, and available computer resources.

The dimensionality is the total amount of features that are used to explain a dataset. An example would be a 784 pixel image having 784 dimensions. Increasing dimensionality makes the learning space more complex and has a direct influence on the way relationships are perceived by the algorithms on the data.

It causes sparsity of data, inaccurate distance calculations, increased cost of computation, and severe overfitting. Even the strongest models might not generalize in cases where dimensions and data sample do not match.

KNN, K-means clustering and Support Vector machines are distance-based and density-based algorithms, which are severely affected since they rely on sensible distance measures.

It cannot be completely eliminated yet it can be easily managed with the help of dimensionality reduction, feature selection, regularization, and suitable design of the dataset.