Big data refers to the very large volumes of data generated every second by websites, mobile applications, sensors, social media, and business systems.

As data volumes grow, traditional tools can no longer store, process, and analyze the data effectively. This is why big data technologies are necessary.

PySpark is an important tool that makes powerful big data processing available to Python users. Where traditional tools fail, PySpark uses distributed computing to process large amounts of data quickly.

What Is PySpark?

PySpark is the Python interface to Apache Spark. Apache Spark is an efficient engine for processing big data, and PySpark exposes Spark's capabilities through Python.

Python developers do not have to learn another language such as Scala; they can write short PySpark programs to work with big data.

PySpark is widely used for data processing because it combines the simplicity of Python with the speed, scalability, and distributed computing capabilities of Spark.

Why Is PySpark Needed?

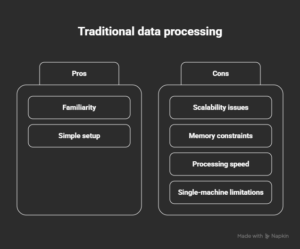

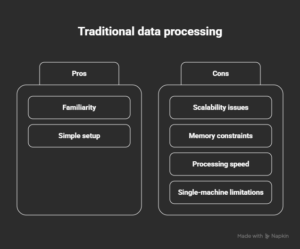

Traditional data processing tools begin to show serious limitations as data size grows.

Most Python libraries are designed to run on a single machine, which makes them unsuitable for very large datasets.

- Limitations of Pandas and traditional tools: Pandas operates in memory on a single system, which makes it slow or unusable for very large data.

- Single-machine challenges: Huge workloads lead to memory exhaustion, slow processing, and crashes.

- Lack of scalability: Traditional tools do not scale easily as data grows.

- Need for distributed processing: Businesses need tools that can split data across a cluster of machines.
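
The idea of splitting data so that several workers each handle a piece can be sketched with the Python standard library alone. This is a conceptual illustration, not how Spark is implemented: threads on one machine stand in for machines in a cluster, and the helper names (`split_into_partitions`, `partial_sum`) are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(values, num_partitions):
    """Split a list into roughly equal chunks, one per hypothetical worker."""
    # Ceiling division; max(1, ...) avoids a zero step for an empty list.
    size = max(1, -(-len(values) // num_partitions))
    return [values[i:i + size] for i in range(0, len(values), size)]


def partial_sum(chunk):
    """Work done independently by each worker on its own chunk."""
    return sum(chunk)


readings = list(range(1, 1001))          # made-up data: 1..1000
partitions = split_into_partitions(readings, 4)

# Each chunk is processed independently; threads stand in for cluster nodes.
with ThreadPoolExecutor(max_workers=4) as pool:
    partials = list(pool.map(partial_sum, partitions))

# Combine the partial results into the final answer.
total = sum(partials)
print(len(partitions), total)
```

A distributed engine follows the same split, process, and combine pattern, but places the chunks on different machines and also handles scheduling and failures.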

Key Features of PySpark

PySpark offers several features that make it well suited to big data processing.

- Distributed data processing: PySpark spreads work across multiple machines, so it can process very large datasets without overwhelming any single machine.

- In-memory computation: Data is kept in memory where feasible rather than on disk, which makes processing far quicker than in traditional disk-based systems.

- Fault tolerance: If a machine fails during processing, Spark can recompute the lost data and re-run the affected work without losing information.

- Easy Python APIs: The Python interfaces are simple to learn and make big data code straightforward to write.

- Handles both structured and unstructured data: PySpark supports tables, logs, text files, JSON, CSV, and other data formats.

Why Do Developers Use PySpark? Benefits of Using PySpark

PySpark has a number of practical advantages that have made it a favorite for big data processing. These benefits help businesses and developers work with large datasets effectively without sacrificing performance or scalability.

- Handles Massive Datasets Efficiently

PySpark can handle volumes of data that traditional tools cannot. Rather than storing all the data in the memory of one machine, it distributes the data across multiple systems. This enables PySpark to process terabytes or even petabytes of data without the slowdowns and crashes that affect single-machine tools.

- Faster Data Processing

Speed is one of PySpark's biggest benefits. It works in parallel across multiple computers, so tasks run at the same time. PySpark also computes in memory, which saves much of the time otherwise spent reading and writing data on disk and makes operations faster.

- Reduced Infrastructure and Processing Costs

PySpark distributes workloads efficiently across systems, which optimizes resource utilization. Businesses can process large datasets on commodity hardware rather than on costly high-end machines. This lowers infrastructure costs without compromising performance or reliability.

- Beginner-Friendly for Python Developers

PySpark lets Python developers work with big data without having to learn a complex new programming language. Its syntax resembles standard Python, which makes it easier to learn and to write code in. This flattens the learning curve and speeds up adoption.

- Strong Open-Source Community and Ecosystem

PySpark is free and supported by a large international community. Regular updates, extensive documentation, and strong community support keep improvements and bug fixes coming. Developers can easily find learning resources and solutions to common problems.

Common Use Cases of PySpark

PySpark is used across industries for many data-driven tasks.

- Big data analytics and reporting: Processing large datasets to identify trends, patterns, and business insights.

- Data pipelines and cleaning: Converting raw data into a format suitable for analysis and storage.

- Machine learning preparation: Preparing and scaling huge datasets for training machine learning models.

- Real-time data processing: Processing live data from sensors, applications, and online sources.

- Log analysis and validation: Monitoring and securing applications by analyzing application and system logs at scale.

- Business intelligence: Fast processing of business data for data warehouses and analytics environments.

Conclusion

PySpark bridges the gap between Python simplicity and big data scalability. It enables developers to handle large volumes of data quickly, reliably, and cost-effectively.

Understanding what PySpark is matters to anyone working in data analytics, data engineering, or machine learning who wants to address the data challenges of modern business effectively.

FAQs

1. Is PySpark good for beginners with Python?

Yes, Python users with only basic programming knowledge can start working with PySpark easily.

2. How does PySpark differ from Pandas?

Pandas runs on a single machine, whereas PySpark can distribute work across several machines.

3. Is it possible to process real-time data using PySpark?

Yes, PySpark supports real-time streaming data processing.

4. Is PySpark commonly used in industry?

Yes, PySpark is very popular across industries for big data analytics.